After a few more failed attempts to print the RC case with ABS I finally gave PLA a chance. Ordered some black 1.75 filament from amazon and a few days later I printed the case successfully from the first try. PLA is great, it’s easy to print, it smells like sugar when printing – as opposed to the chemical smell of ABS – and the quality is really good. However it doesn’t like to be sanded. At all! It’s like trying to sand rubber – or more accurately – sugar.

I decided to stop worrying about the finish so much and ordered some plastic primer and white matte paint. In the meantime I finished the RC PCB and made all the connections and did the first real test.

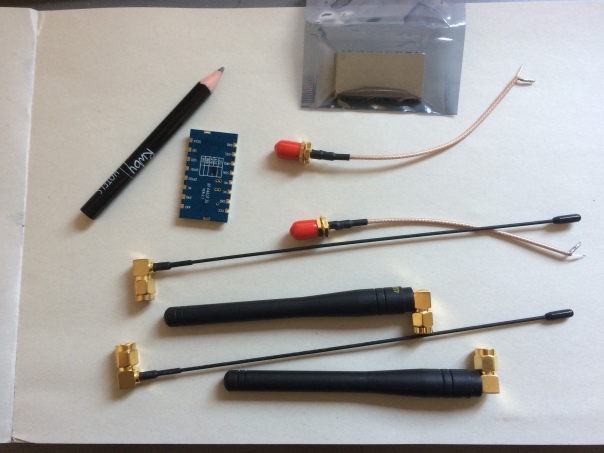

Here it is:

Features:

- 3 axis gimbal for yaw, pitch and roll. It’s a very high quality one with bearings and hall sensors instead of pots

- Motorized linear pot for the throttle. I went for motorized because when changing flight modes I want to have the throttle in the correct position to avoid stopping the motors

- 0.96″ OLED screen for status info, calibration and other things

- 8 ADC channels – 4 for the sticks, 3 for the individual LiPo cells and another one for the Gimbal Pitch pot

- a 4×4 button matrix implemented with pigpio for all the buttons and switches

- 2 rotary encoders for live editing of parameters like PIDS, menu navigation, etc

- 2x 2.4 GHZ wifi diversity for the video feed

- 5.8 GHZ wifi for the phone connection – to send the video feed through

- 433 Mhz 30 dBm link for the RC data

- 2.2Ah 3S LiPo battery for ~5h of continuous use, with charger/balancer port

The screen is connected through i2c1 at 1Mhz together with 2 ADC sensors (ADS1115).

I’m using this library to talk to the screen but noticed that a full display update takes ~20-30ms during which I cannot talk to the ADC sensors. To fix this, I changed the library and implemented partial screen updates. Now I can call screen->displayIncremental(1000) and the class will send incremental lines to the screen for 1000 microseconds (1 millisecond). The overall FPS is the same as with full updates but I get to do other things while the display is being updated. To avoid tearing I also added double buffering to the class and an explicit swap method.

The end result is a 40-45 screen updates per second but each update is split in 12-13 partial uploads with ADC readings in the middle. So I can sample the ADC at ~600Hz which is more than enough for a RC system.

The RC has 13 buttons and 2 rotary encoders requiring a total of 17 GPIO. Since I didn’t have enough I ended up grouping the 13 buttons in a matrix of 4×4 following this tutorial. This allows me to reduce the number of GPIO to 12 (8 for the matrix and 4 for the rotary encoders). I implemented the matrix reading using PIGPIO and added some debounce code to avoid detecting ghost presses/releases. Seems to work great and it’s very fast.

Most of the HW is done and it’s a mess of wires. I’m working now on some videos of potting it together, making the connections and the calibration.

The next step is to work on the phone app to receive the video feed, although I think I will give the quad a test – line of sight.

I really want to fly soon.